Starlet #8 Prompt flow - Developer Tool for Building High-Quality LLM Applications

This is the eighth issue of The Starlet List. If you want to promote your open source project on star-history.com for free, please check out our announcement.

Recently open-sourced by Microsoft, Prompt flow is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications: ideation, prototyping, testing, evaluation, production deployment, and monitoring. It makes prompt engineering much easier and helps you build production-quality LLM apps.

Why

Using Large Language Models (LLMs) such as ChatGPT and GPT-4 to enhance existing software or build new applications has become a pivotal strategy for many developers. Microsoft helped drive this trend: its early access to OpenAI's models let it pioneer in this domain. One significant shift LLMs bring to application development is that applications become far more stochastic. The same input can produce different outputs, so traditional testing methods, which typically assert an exact expected result, may be inadequate or not applicable at all. Prompt flow represents Microsoft's effort to bring its insights on building quality into LLM application development.

How

Prompt flow provides a suite of developer tools including:

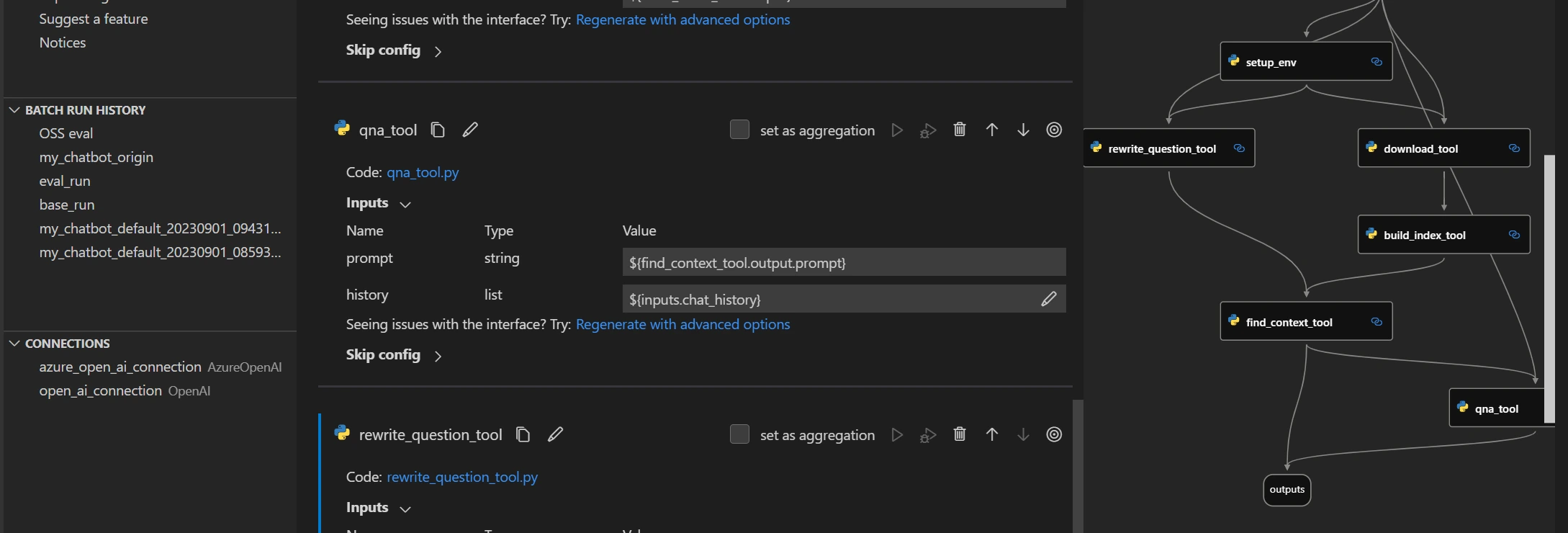

- A VS Code extension for visually developing and testing flows within VS Code. Although it may at first look like a low-code tool for building static "graphs", it lets you write code in Python nodes with as much flexibility as you need, so you can take full advantage of the quality-assurance capabilities Prompt flow offers.

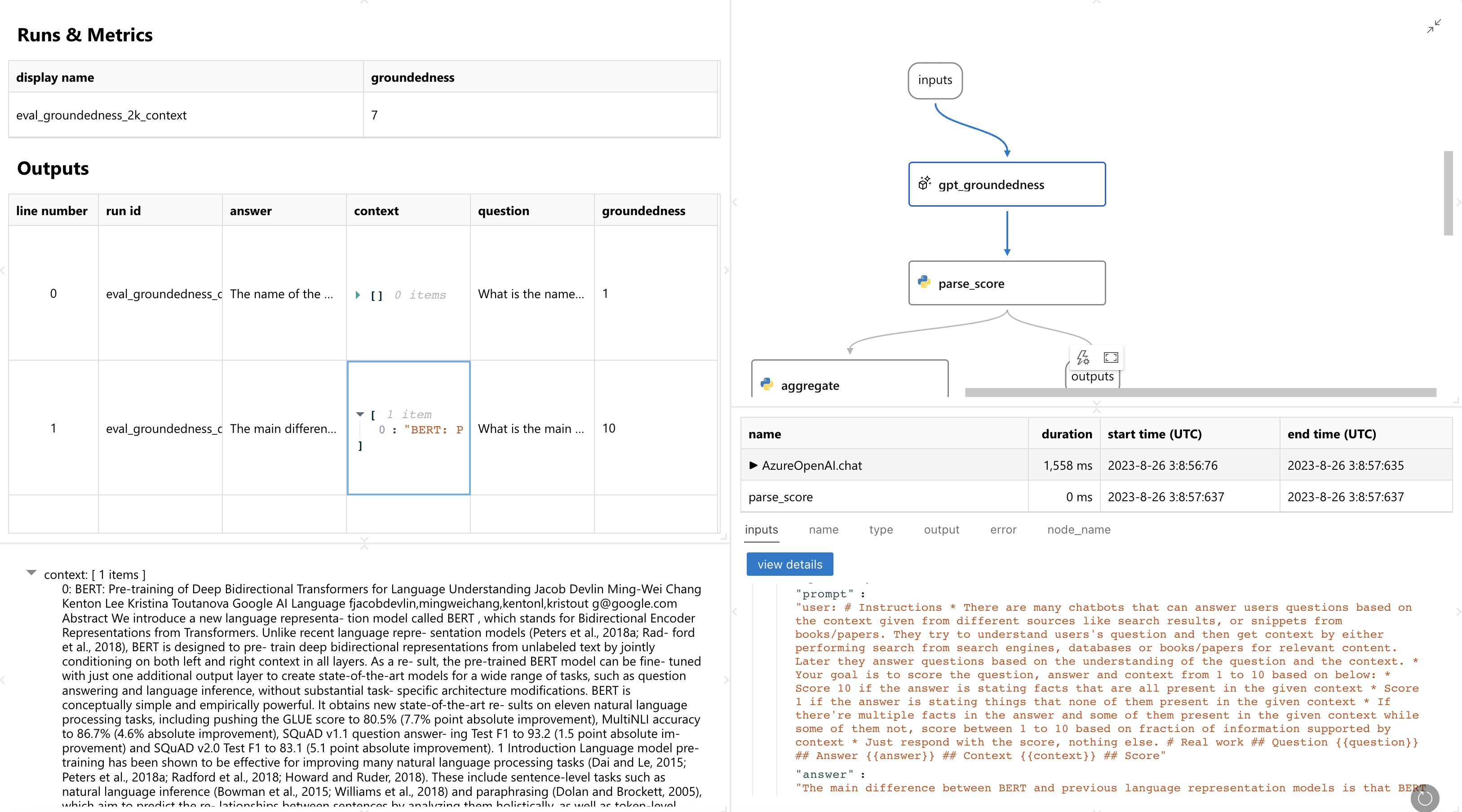

- A Python SDK/CLI for running batch tests and evaluations, with clear visualizations of test results and intermediate outputs. It can also be integrated into your CI/CD pipeline.

- Built-in support for prompt tuning, which makes it easy to test multiple prompts and select the best one based on metrics.

- Flexible deployment support: you can package your flow as a Docker container and run it on various cloud platforms, or integrate the flow into your existing Python application using the SDK.
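To make these ideas more concrete, here is a minimal, library-free Python sketch of what a flow of Python nodes, a batch evaluation, and metric-based prompt selection boil down to. It does not use Prompt flow's SDK or CLI: the node functions (`render_prompt`, `call_llm`, `clean_answer`), the `fake_llm` stub standing in for a real model, the two prompt variants, the tiny dataset, and the exact-match metric are all hypothetical, chosen so the example runs offline and deterministically.

```python
from typing import Dict, List, Tuple

# Hypothetical canned answers used by the fake model below.
KNOWN_ANSWERS = {"capital of France?": "Paris", "2 + 2?": "4"}


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call (deterministic, offline).

    It "answers correctly" only when the prompt asks for a concise reply,
    so different prompt variants produce measurably different quality.
    """
    question = prompt.split("Q:")[-1].strip()
    if "concise" in prompt:
        return KNOWN_ANSWERS.get(question, "unknown")
    return f"Well, regarding '{question}', it depends."


# --- Flow nodes: plain Python functions wired together in a fixed order ---
def render_prompt(template: str, question: str) -> str:
    return template.format(question=question)


def call_llm(prompt: str) -> str:
    return fake_llm(prompt)


def clean_answer(raw: str) -> str:
    # Trim whitespace and a trailing period from the raw model output.
    return raw.strip().rstrip(".")


def run_flow(template: str, question: str) -> str:
    """Run the three nodes in sequence (a linear DAG) for one input."""
    prompt = render_prompt(template, question)
    raw = call_llm(prompt)
    return clean_answer(raw)


# --- Evaluation: a simple exact-match metric averaged over a batch ---
def exact_match(prediction: str, expected: str) -> float:
    return 1.0 if prediction.lower() == expected.lower() else 0.0


def batch_evaluate(template: str, dataset: List[Dict[str, str]]) -> float:
    scores = [
        exact_match(run_flow(template, row["question"]), row["expected"])
        for row in dataset
    ]
    return sum(scores) / len(scores)


# --- Prompt tuning: score every variant and keep the best one ---
def pick_best_variant(
    variants: Dict[str, str], dataset: List[Dict[str, str]]
) -> Tuple[str, Dict[str, float]]:
    results = {name: batch_evaluate(tpl, dataset) for name, tpl in variants.items()}
    best = max(results, key=results.get)
    return best, results


# Hypothetical test data and prompt variants.
DATASET = [
    {"question": "capital of France?", "expected": "Paris"},
    {"question": "2 + 2?", "expected": "4"},
]

VARIANTS = {
    "verbose": "Please discuss this at length. Q: {question}",
    "concise": "Answer with one concise word. Q: {question}",
}

if __name__ == "__main__":
    best, results = pick_best_variant(VARIANTS, DATASET)
    print(results)  # {'verbose': 0.0, 'concise': 1.0}
    print("best variant:", best)  # best variant: concise
```

In Prompt flow itself, flows, prompt variants, and evaluations are defined and run through its tooling rather than hand-wired like this; the sketch only illustrates the underlying idea of scoring each prompt variant over a dataset with a metric and keeping the best one, instead of asserting a single exact output.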

In addition, a cloud-based version of Prompt flow is available in Azure AI; it adds enterprise features and supports team collaboration on Azure. It is currently in public preview. You can find more details here.

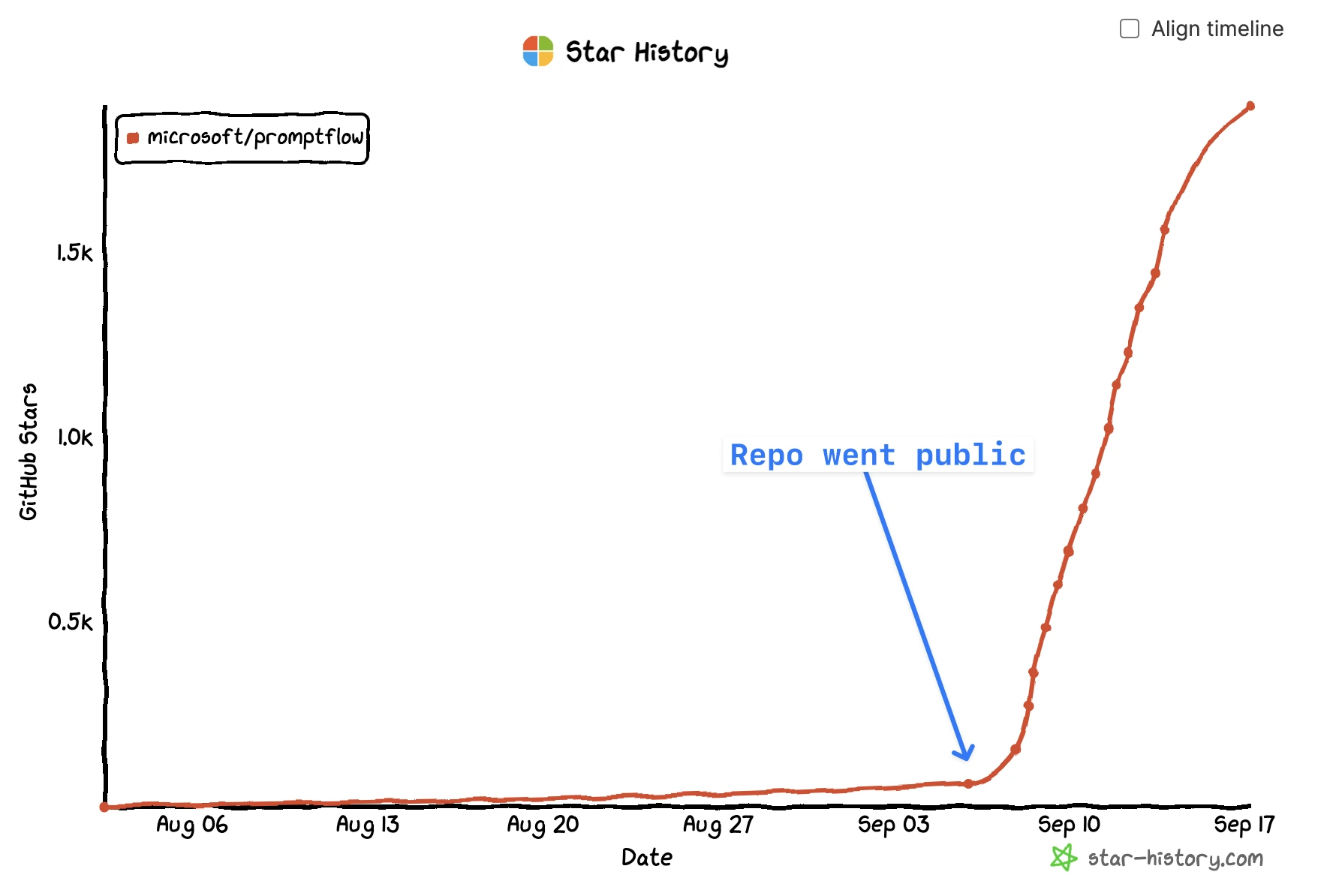

Star growth

Prompt flow was publicly released in early September and quickly drew attention, accumulating 2,000 stars within its first two weeks 🚀.
