Star History Monthly Pick | Llama 2 and Ecosystem Edition

On July 18th, Meta released Llama 2, the next generation of Llama. It can be freely used for research and commercial purposes, and supports private deployment.

So we've rounded up a few open-source projects that help you get Llama 2 running on your own machine, whatever that machine is!

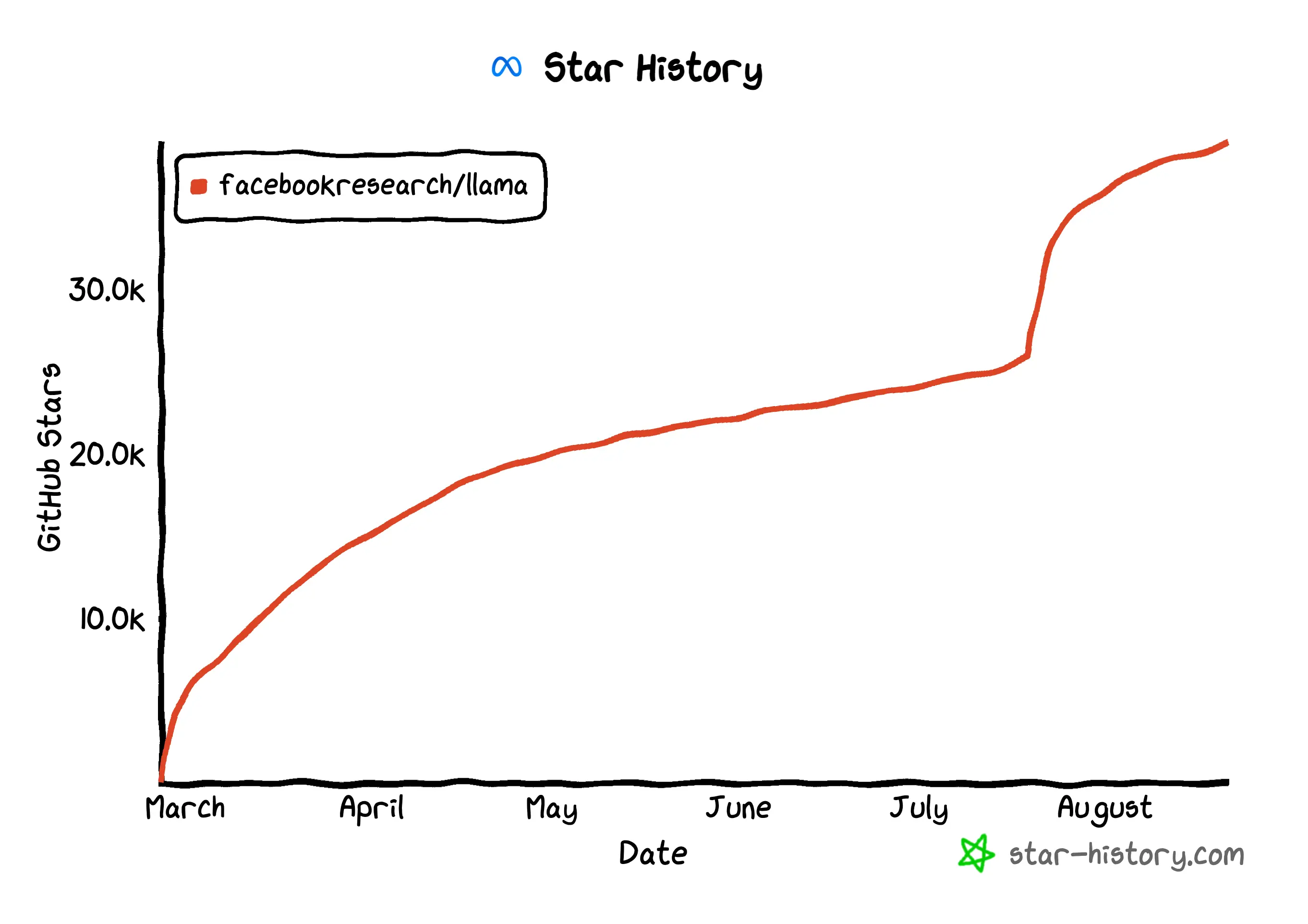

Llama

Llama is an open-source large language model (LLM) trained on publicly available data. The original Llama was officially open-sourced this February, and five months later the next generation followed.

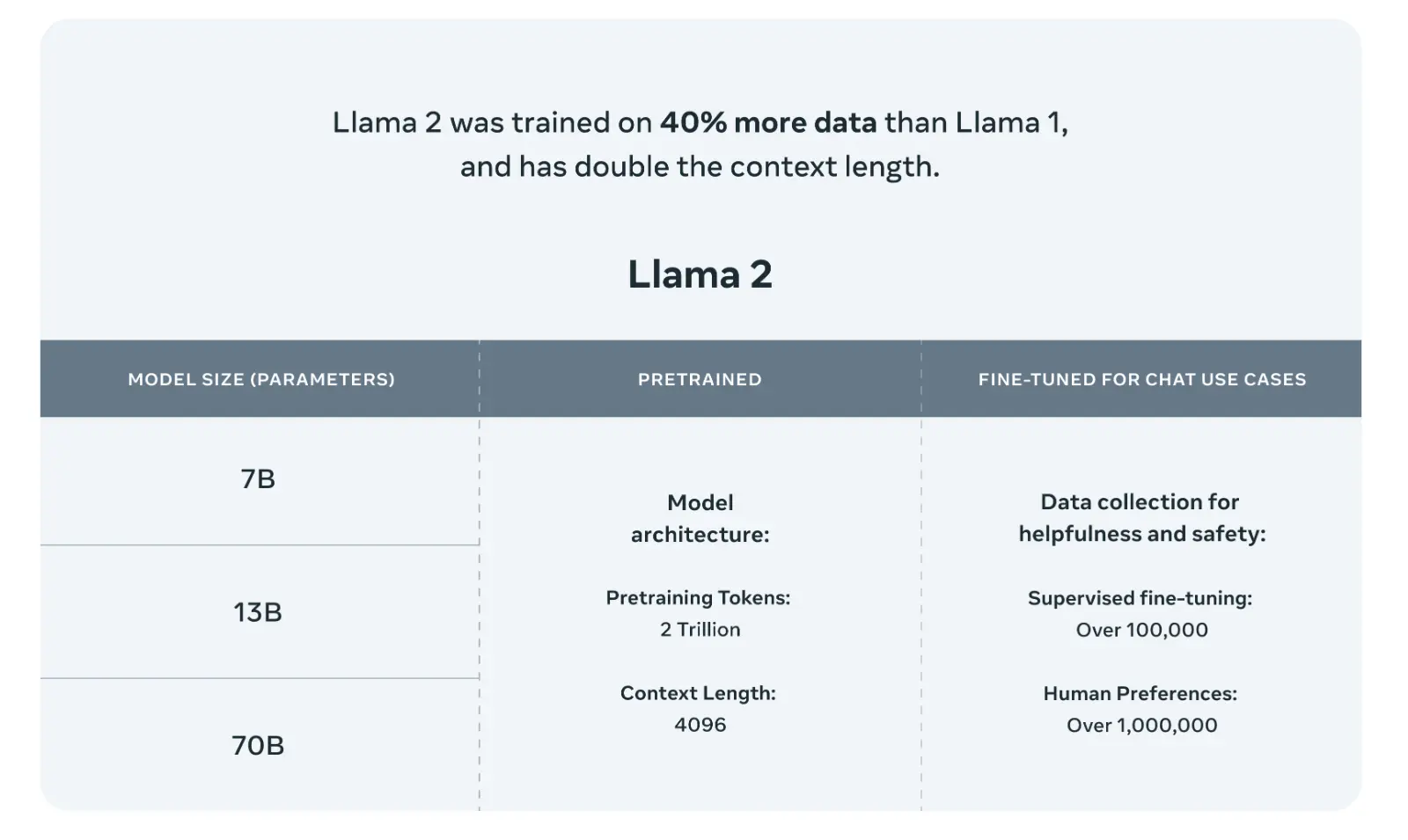

Compared to the original, Llama 2 was trained on 2 trillion tokens, has double the context length of Llama 1 (4,096 vs. 2,048 tokens), and comes in three sizes: 7B, 13B, and 70B parameters. The different sizes let you choose between a smaller, faster model and a larger, more accurate one, depending on your needs.
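To get a feel for what the three sizes mean for your hardware, here is a rough back-of-the-envelope sketch in Python. It assumes the weights alone take about (parameter count × bits per weight ÷ 8) bytes, uses the nominal 7B/13B/70B counts, and ignores the KV cache, activations, and runtime overhead, so treat the numbers as lower bounds rather than official requirements.

```python
# Rough estimate of the memory needed just to hold Llama 2's weights.
# Assumption: bytes ~= parameter count * bits per weight / 8. This ignores the
# KV cache, activations, and runtime overhead, so real usage will be higher.

LLAMA2_SIZES = {"7B": 7e9, "13B": 13e9, "70B": 70e9}  # nominal parameter counts


def weight_gb(n_params: float, bits_per_weight: float) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for name, n in LLAMA2_SIZES.items():
        fp16 = weight_gb(n, 16)  # half-precision weights
        q4 = weight_gb(n, 4)     # roughly 4-bit quantized weights
        print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

Running it prints about 14.0 GB vs. 3.5 GB for 7B, 26.0 GB vs. 6.5 GB for 13B, and 140.0 GB vs. 35.0 GB for 70B, which helps explain why quantized builds are a popular way to run the smaller models on everyday machines.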

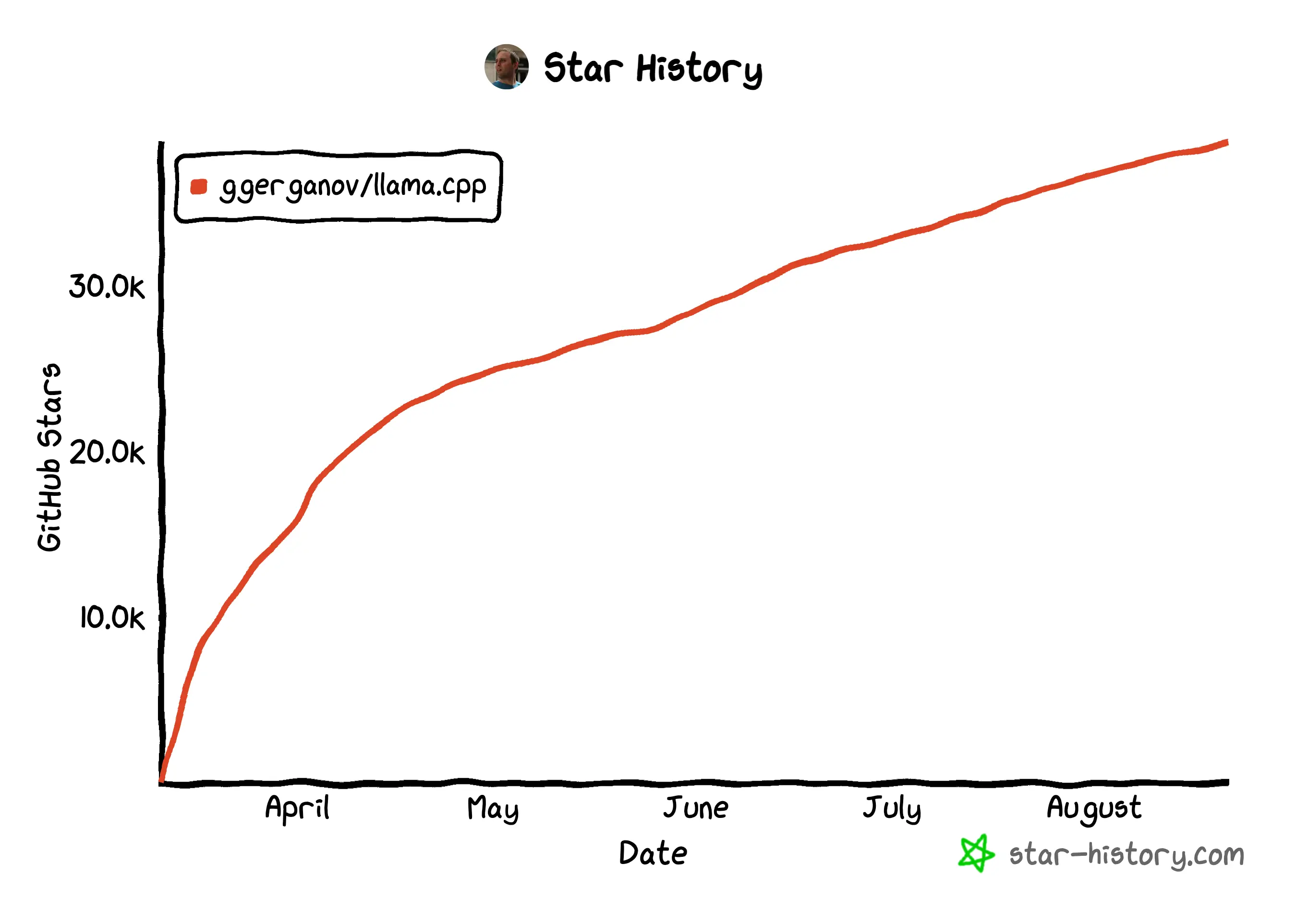

llama.cpp

llama.cpp is one of the community projects highlighted in Meta's official announcement. It reimplements Llama's inference code in C/C++ and, through a series of optimizations, challenges what we thought was possible: it runs large LLMs at usable speeds on ordinary hardware. For example:

- On a Google Pixel 5, it can run the 7B model at 1 token/s.

- On an M2 MacBook Pro, it can run the 7B model at 16 tokens/s.

- On a Raspberry Pi with 4GB of RAM, it can run the 7B model at 0.1 tokens/s.
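One of the optimizations llama.cpp is known for is quantization: storing weights in roughly 4 bits instead of 16. The Python sketch below is a simplified, hypothetical illustration of block-wise quantization, where each block of weights shares one floating-point scale and every weight becomes a small integer. It is not llama.cpp's actual code or on-disk format; the block size of 32, the [-8, 7] integer range, and all function names are choices made for this example.

```python
# Simplified block-wise 4-bit quantization, in the spirit of (but NOT identical
# to) llama.cpp's quantized formats. Each block of weights shares one float
# scale; each weight is stored as a small integer in [-8, 7].

BLOCK_SIZE = 32  # hypothetical block size for this sketch


def quantize_block(weights):
    """Map a non-empty block of floats to (scale, list of ints in [-8, 7])."""
    max_abs = max(abs(w) for w in weights)
    # Choose the scale so the largest magnitude maps to 7; avoid dividing by 0.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return scale, q


def dequantize_block(scale, q):
    """Recover approximate floats from the stored scale and integers."""
    return [scale * v for v in q]


def quantize(weights, block_size=BLOCK_SIZE):
    """Split a flat list of weights into blocks and quantize each block."""
    return [
        quantize_block(weights[i:i + block_size])
        for i in range(0, len(weights), block_size)
    ]


def dequantize(blocks):
    """Concatenate the dequantized values of every block, in order."""
    out = []
    for scale, q in blocks:
        out.extend(dequantize_block(scale, q))
    return out
```

Because `round()` moves each value by at most half a step, every restored weight lands within `scale / 2` of the original. If each block's scale were stored as a 16-bit float, the cost would be 4 bits per weight plus 16 / 32 = 0.5 bits of overhead, about 4.5 bits in total versus 16 for half precision.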

The project has been so successful that its author, Georgi Gerganov, turned his side project into a startup, ggml.ai, built around ggml, the tensor library for machine learning that powers both llama.cpp and whisper.cpp.

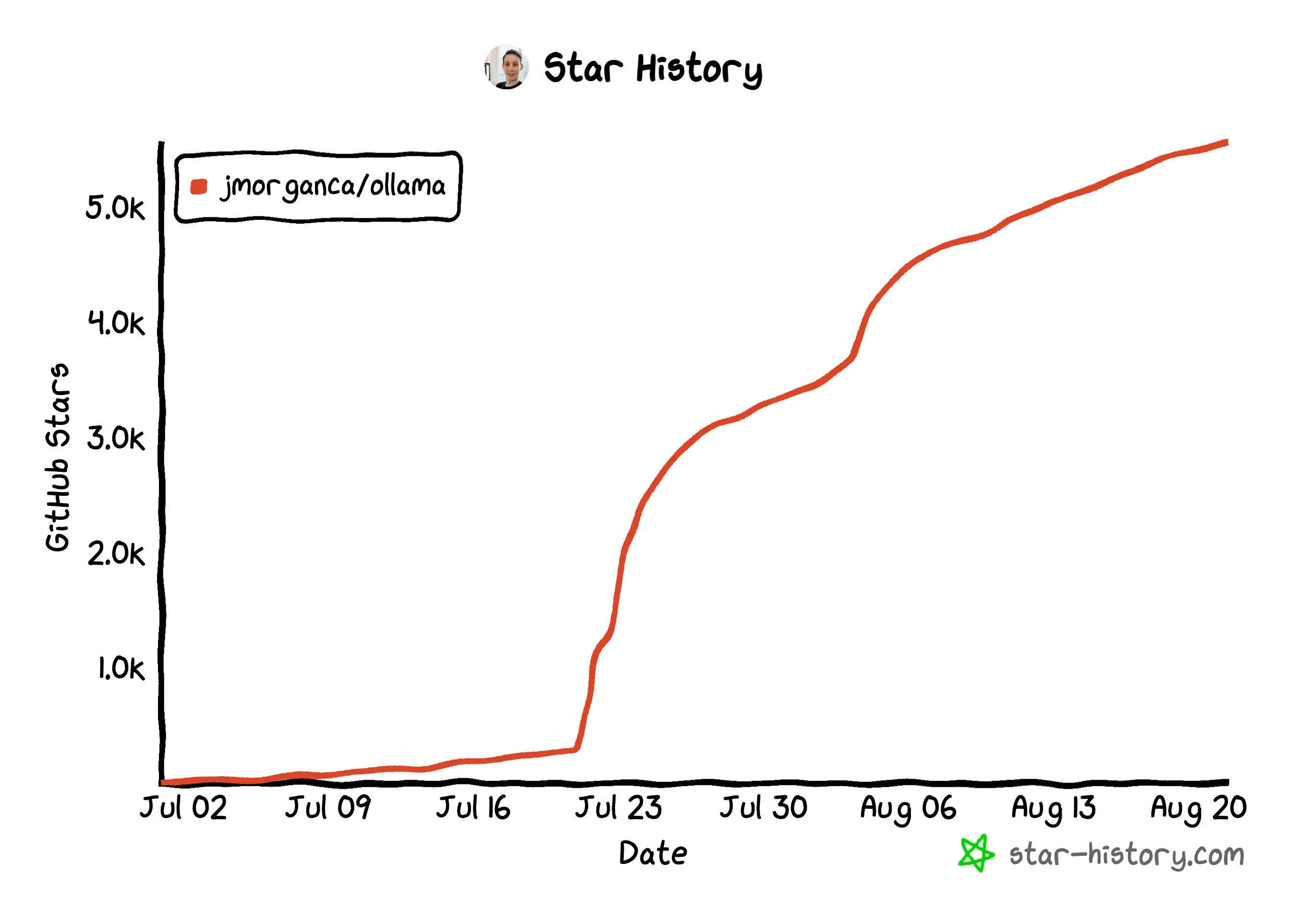

Ollama

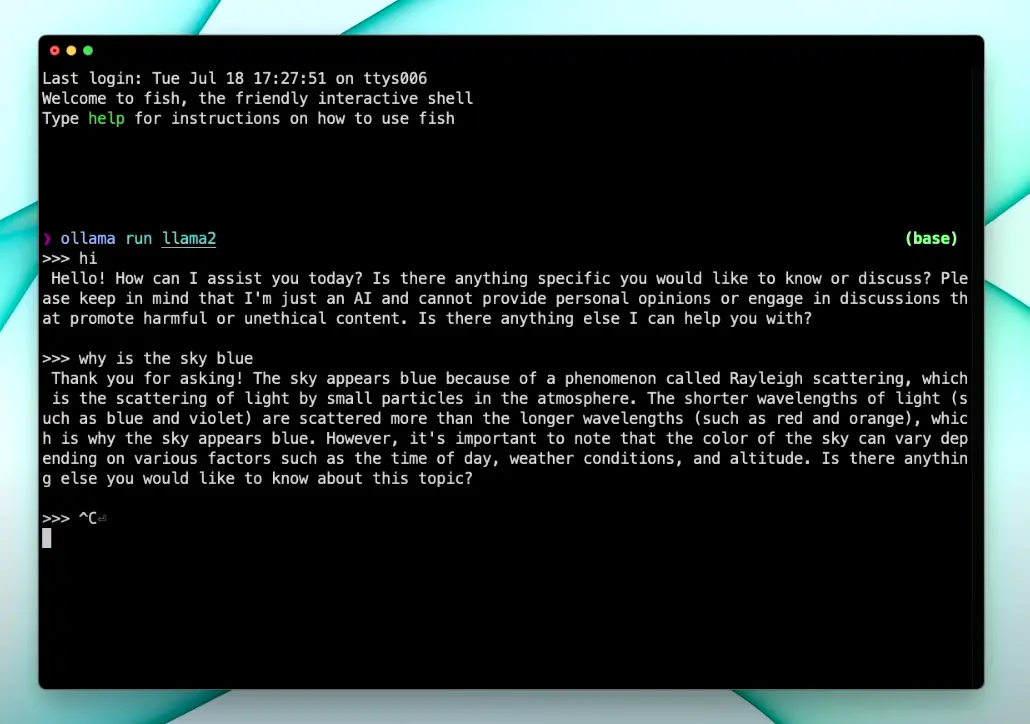

Ollama is designed to make it easy to run, create, and share LLMs. It currently supports macOS, with Windows and Linux support coming soon, according to its website.

Ollama's author previously worked at Docker, and the rise of open-source language models convinced him that LLMs could use a similar tool. This led to the idea of distributing models as pre-built packages with adjustable parameters.

Once you have installed Ollama on your Mac, you can start chatting with Llama 2 by simply running `ollama run llama2`.
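If you'd rather call Llama 2 from your own code than from the terminal, Ollama also runs a local HTTP server. The Python sketch below assumes that server is listening on its default address, `http://localhost:11434`, and that its `POST /api/generate` endpoint streams newline-delimited JSON objects, each carrying a `response` text fragment and a `done` flag. The helper names `join_stream` and `generate` are our own, so double-check the endpoint against the API docs for your Ollama version.

```python
# Minimal client for a locally running Ollama server (standard library only).
# Assumptions: the server listens on http://localhost:11434 and POST
# /api/generate streams newline-delimited JSON objects with a "response"
# fragment and a "done" flag. Verify against your Ollama version's API docs.
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"


def join_stream(lines):
    """Concatenate the "response" fragments from an NDJSON stream."""
    parts = []
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank keep-alive lines
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final object marks the end of the reply
    return "".join(parts)


def generate(prompt, model="llama2", url=OLLAMA_URL):
    """Send one prompt to the local server and return the full reply text."""
    body = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        # Iterating over the HTTP response yields one line (bytes) at a time.
        return join_stream(line.decode("utf-8") for line in resp)
```

For example, with the Ollama app running and the model already pulled (running `ollama run llama2` once takes care of that), `print(generate("Why is the sky blue?"))` should print the whole reply at once instead of streaming it token by token.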

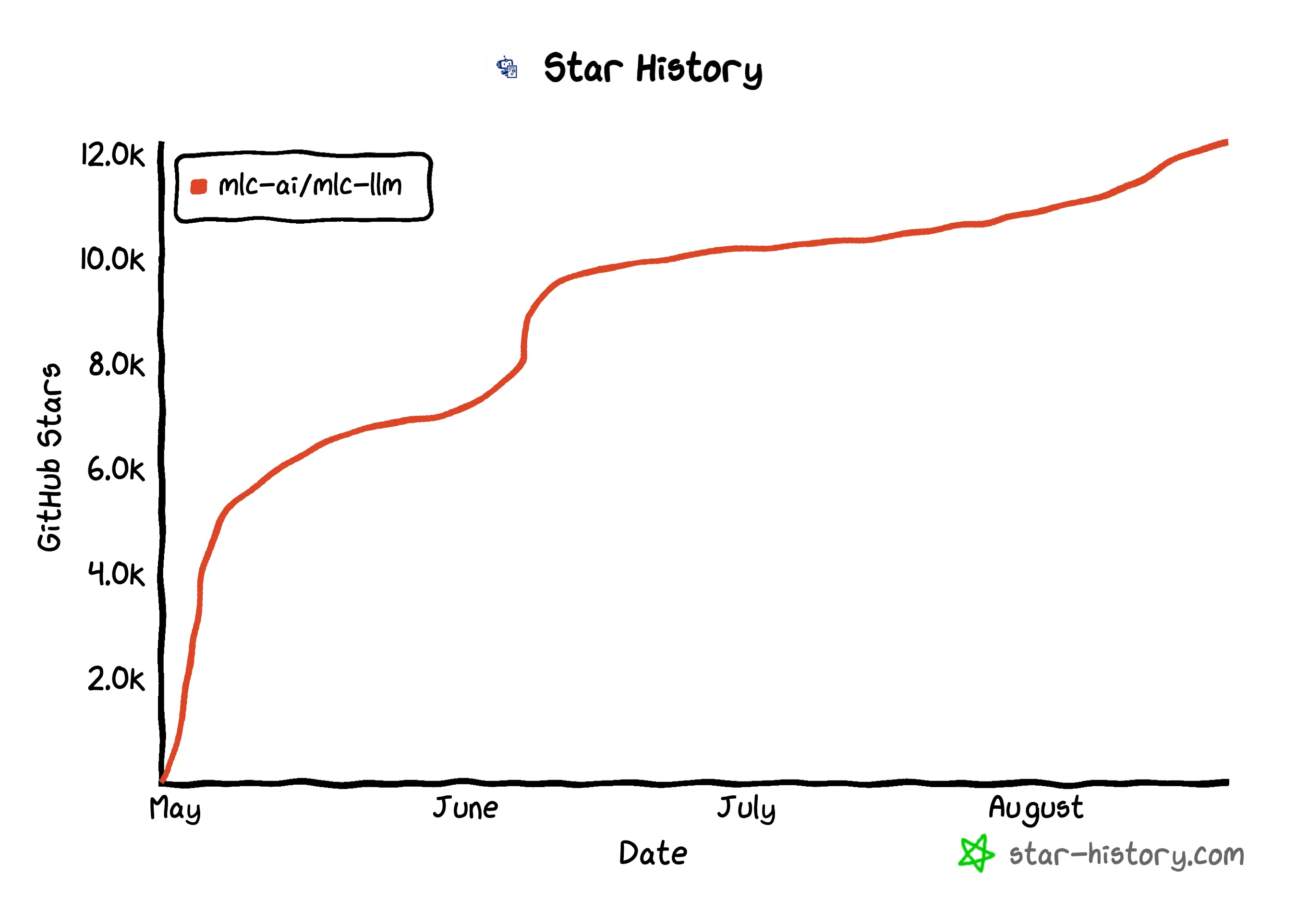

MLC LLM

MLC LLM aims to let anyone develop, optimize, and deploy AI models natively on any device. You can deploy LLMs on a diverse set of hardware backends and native applications (supported devices include phones, tablets, computers, and web browsers) without needing a server. You can also further optimize model performance for your own use cases.

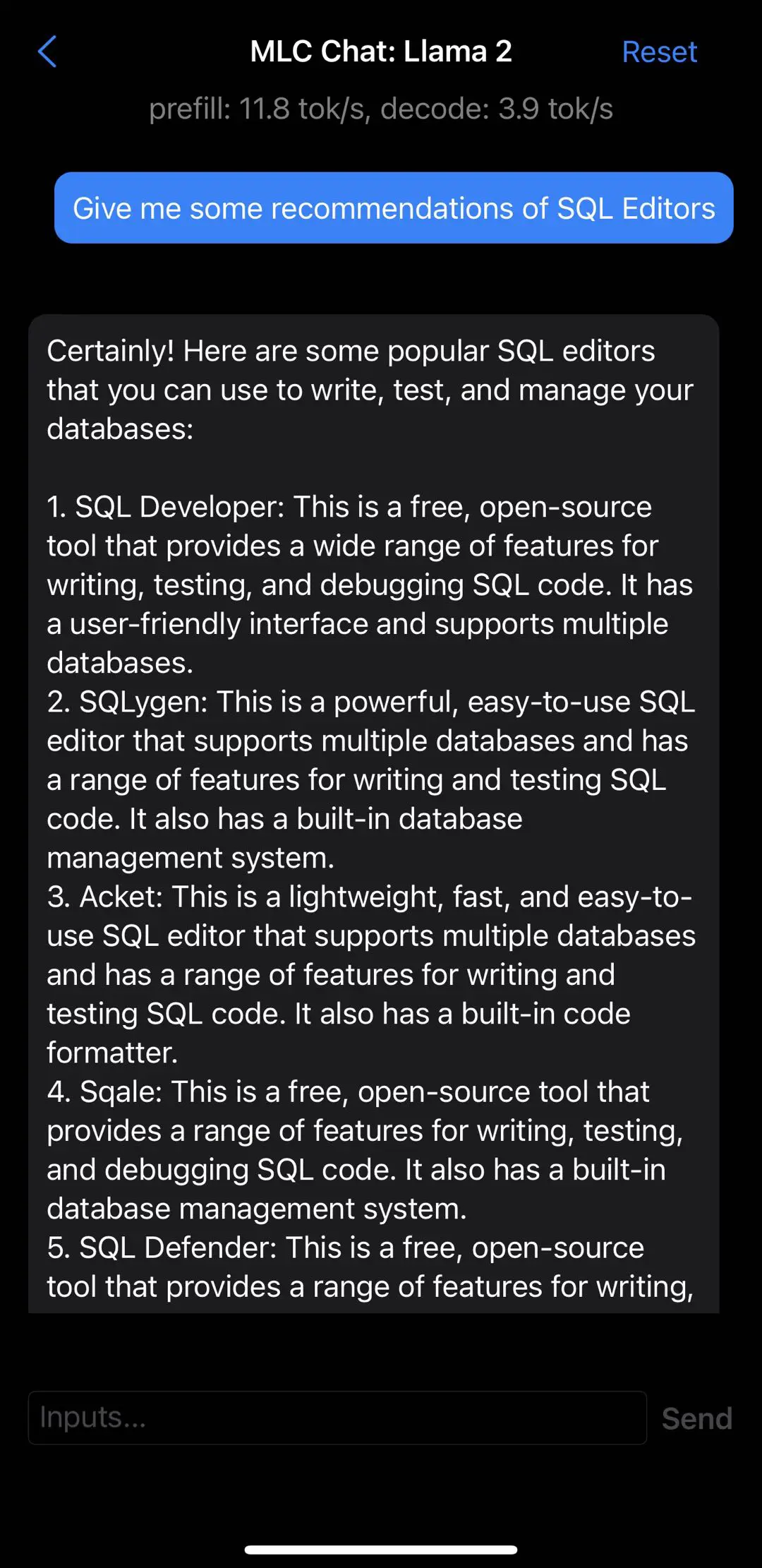

MLC Chat has already launched on the Apple App Store and now supports the Llama-2-7b model. It is simple and super easy to get started with, although my iPhone got really hot after 3 questions 😅 (Side note: looks like Llama 2 still has a lot of room to grow, though. Are any of these SQL editors real?)

LlamaGPT

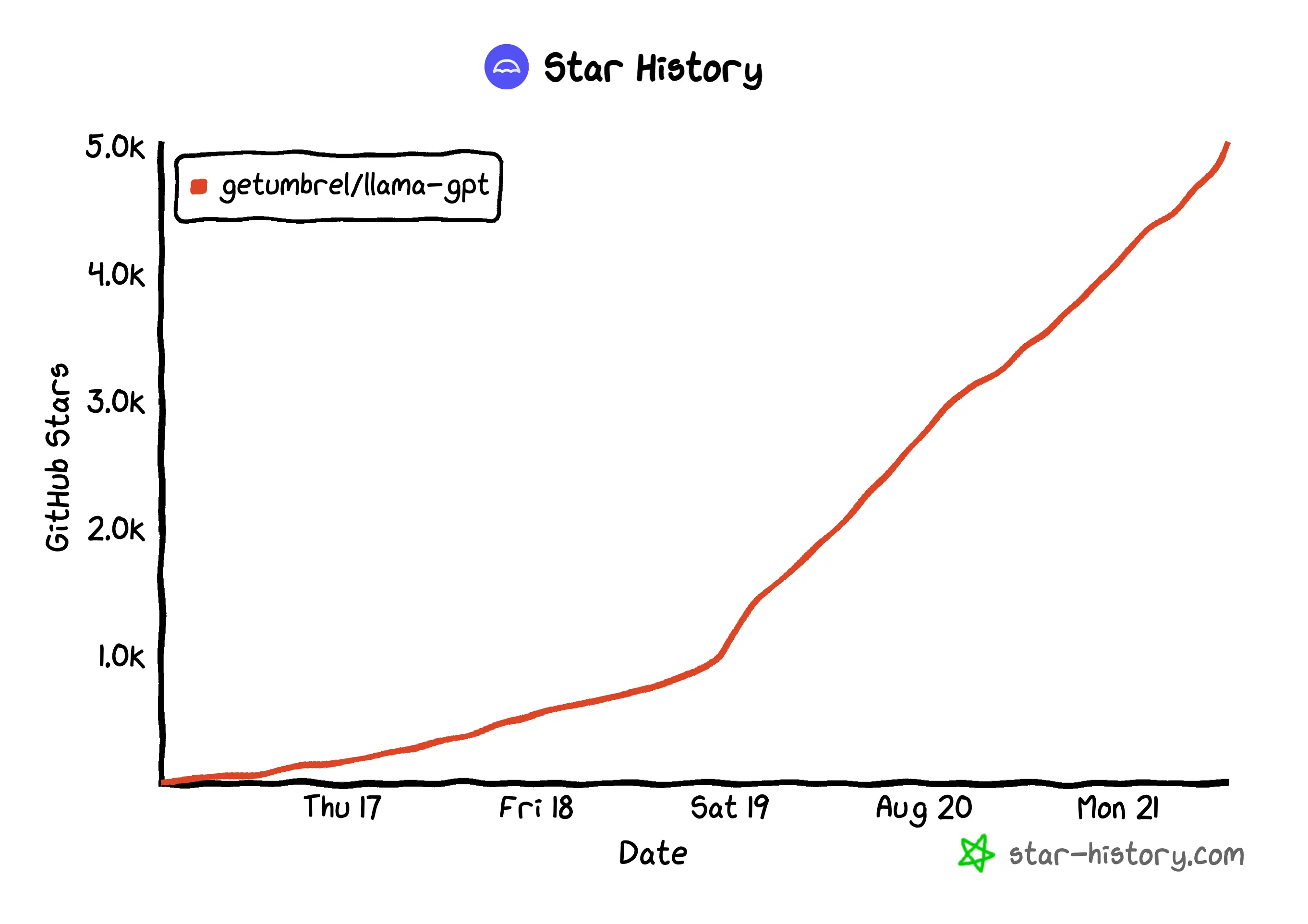

LlamaGPT shows that the AI wave is still at its peak: it gained 6.6K stars on GitHub just five days after being open-sourced.

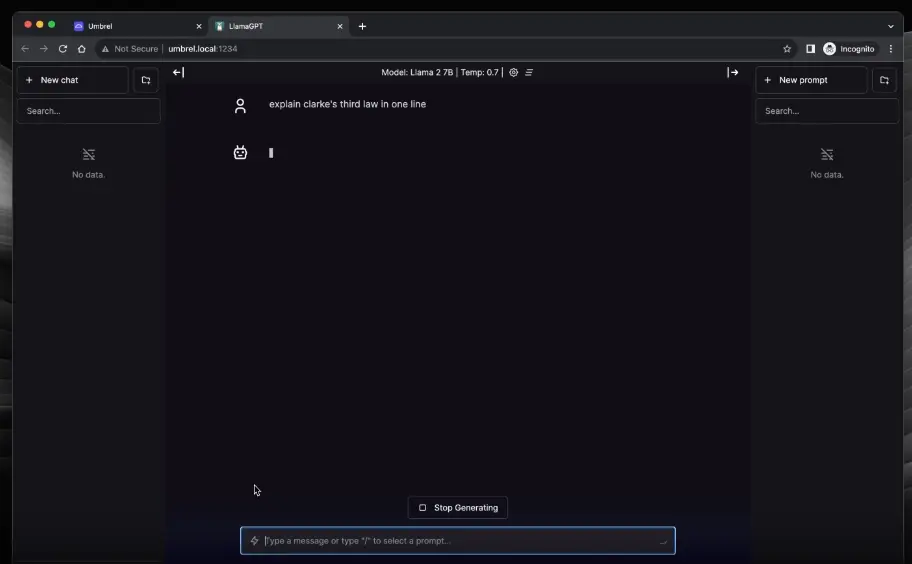

It is a self-hosted chatbot that offers a ChatGPT-like experience without sending any data off your device. All three Llama 2 model sizes are currently supported, and llama.cpp powers the backend (all hail open source).

Compared to the tools above, LlamaGPT is a more complete application: it ships with a UI and requires no manual configuration or parameter tuning. That makes it the friendliest way for non-technical users to get started with Llama 2.

Last but not least

As an open-source LLM that is free and available for commercial use, Llama has brought AI closer to all of us. It may not yet be as advanced as some paid models, but as Meta put it in its press release: "We have experienced the benefits of open source, such as React and PyTorch, which are now commonly used infrastructure for the entire technology industry. We believe that openly sharing today’s large language models will support the development of helpful and safer generative AI too." With the power of the community behind it, Llama and its ecosystem will surely keep iterating, and quickly.

Of course, there are many other ways to start using Llama 2, such as Homebrew or Poe. For further reading:

- Run Llama 2 on your own Mac using LLM and Homebrew

- Llama 2 is here - get it on Hugging Face

- A comprehensive guide to running Llama 2 locally

AND: the Starlet Issues

One more piece of news for July: we've started a new column, "Starlet List". If you are an open-source maintainer and would like to promote your project (for free!), shoot us an email at star@bytebase.com and tell us how you'd like your project presented to our readers.

In the meantime, check out the July starlets:

Bytebase: Database DevSecOps for MySQL, PG, Oracle, SQL Server, Snowflake, ClickHouse, Mongo, Redis