Starlet #10 PostgresML: The GPU-powered AI application database

This is the tenth issue of The Starlet List. If you want to promote your open source project on star-history.com for free, please check out our announcement.

PostgresML cuts the complexity from AI so you can get your app to market faster. It’s the first GPU-powered AI application database – enabling users to build chatbots, search engines, forecasting apps and more with the latest NLP, LLM and ML models using the simplicity and power of SQL.

Problem

PostgresML provides all the functionality I wish I'd had while working as a machine learning engineer at Instacart and scaling the company's data infrastructure. We needed a platform to quickly and reliably implement our online systems, like our site search engine and real-time fraud detection, both crucial functions for ecommerce.

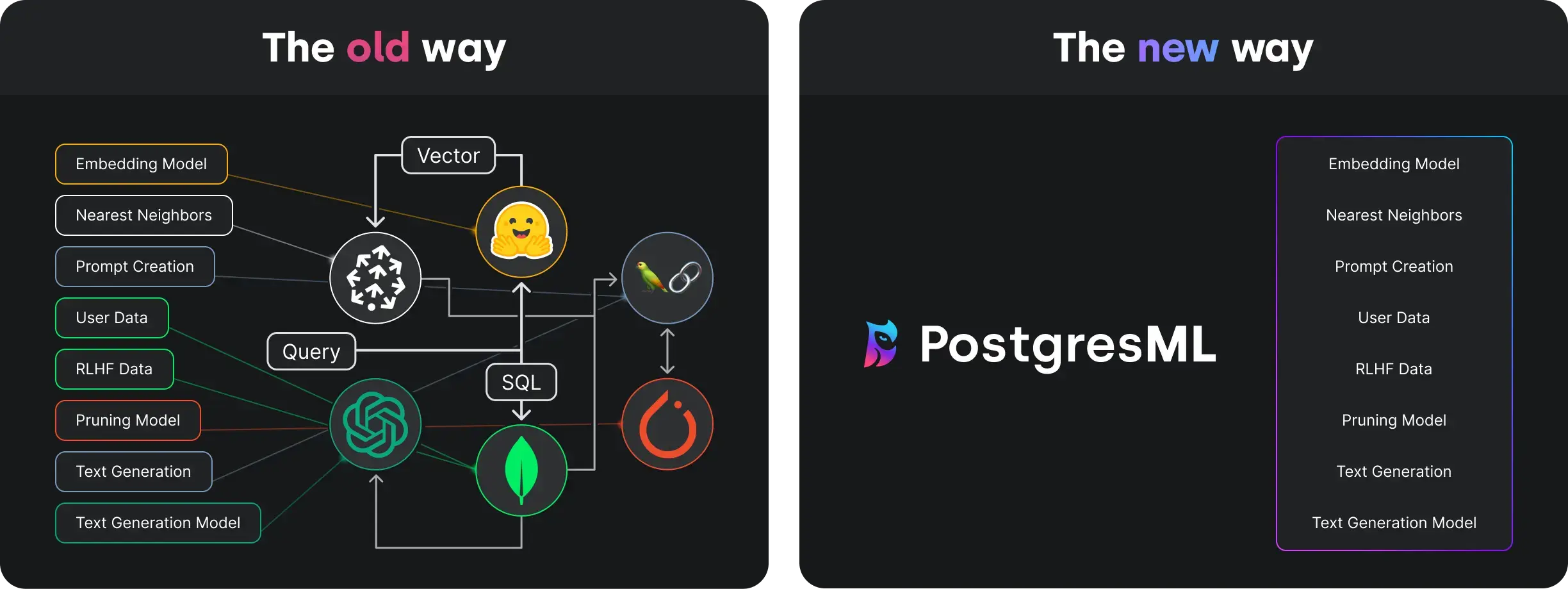

Today’s machine learning practitioners are running into the same problems with complex, data-hungry ML and AI apps. A leading cause of slowdowns is the network latency that comes with a microservices architecture: several round-trip network calls must be made across multiple services in the MLOps ecosystem before an accurate response can be returned. That is slower for users, and it is more complex for engineers and product teams to build and manage.

Who wants to wait more than a few seconds for search results or a response from a chatbot? And what engineer wants to manage more complexity?

Solution

PostgresML colocates data and compute, so you can save models and index data right in your Postgres database. When you need to train or predict using machine learning, you don’t have to make requests over the internet or even take your data out of your database, which is both slower and a data safety risk. We’ve even added GPUs to our databases to fully leverage the latest technology and algorithms. You can avoid the complexity and latency of microservices when you have a complete MLOps platform right in your database.

Instead of patching together MongoDB, Databricks, Pinecone, Hugging Face, LangChain and more to get the latest algorithms and LLMs in your app – you can add one extension to your Postgres database and get to market quickly. Here’s what a typical chatbot build looks like with and without PostgresML:

Why Postgres? If it’s not broke, don’t fix it. Postgres is old reliable for a reason. It’s highly efficient, scalable and open-source (just like all of PostgresML). Plus, you can easily build your AI app using simple SQL.

➡️ Scalability

In ML applications, users constantly generate new data that has to be stored somewhere (a feature store). Features come at several time scales: long-term historical features, short-term session-level features, and real-time request-level features. Postgres can handle all of them. Partitioning, tablespaces and indexing cover long-term storage, ordinary tables cover short-term storage, and real-time features can be passed as query parameters or read from session-level storage already written during the request.
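
As a rough sketch of how those pieces could fit together, the SQL below partitions a long-term feature table by time, indexes it for per-user lookups, and passes request-level features as parameters to a prepared statement. The table, column and project names (`user_features`, `orders_90d`, `avg_basket`, `'Fraud Detection'`) are hypothetical and not part of PostgresML. It assumes PostgreSQL 11 or later with the `pgml` extension installed and a model already trained and deployed under that project name.

```sql
-- Long-term historical features, range-partitioned by time (hypothetical schema).
CREATE TABLE user_features (
    user_id     bigint      NOT NULL,
    recorded_at timestamptz NOT NULL,
    orders_90d  real        NOT NULL,
    avg_basket  real        NOT NULL
) PARTITION BY RANGE (recorded_at);

-- One partition per month; old partitions can be detached or dropped cheaply.
CREATE TABLE user_features_2023_09 PARTITION OF user_features
    FOR VALUES FROM ('2023-09-01') TO ('2023-10-01');

-- Index used to find the latest feature row for a given user.
CREATE INDEX ON user_features (user_id, recorded_at DESC);

-- Real-time request-level features arrive as parameters ($2, $3) and are
-- combined with the stored features inside the database.
PREPARE score_request (bigint, real, real) AS
SELECT pgml.predict(
    'Fraud Detection',  -- hypothetical project name
    ARRAY[f.orders_90d, f.avg_basket, $2, $3]
) AS score
FROM user_features AS f
WHERE f.user_id = $1
ORDER BY f.recorded_at DESC
LIMIT 1;

-- Example call: user 42, plus two request-level values.
EXECUTE score_request(42, 3.0, 129.99);
```

The order of values in the array has to match the feature columns the model was trained on, so a real schema would follow whatever the training relation looks like.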

➡️ Efficiency

In-database ML minimizes latency and computational cost. Postgres also handles many types of data efficiently, including vectors, geospatial, JSON, time series, tabular and text. Our benchmarks show up to a 40x speedup over Python microservices.
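
To illustrate the mixed data types, here is a minimal sketch of one table holding text, JSON, timestamps and vectors, queried in a single statement. It uses plain PostgreSQL (12 or later, for generated columns) plus the pgvector extension, which is assumed to be installed. The `products` table and its columns are hypothetical, and the three-dimensional embedding is only for readability.

```sql
CREATE EXTENSION IF NOT EXISTS vector;  -- pgvector, assumed to be available

-- Hypothetical table mixing several data types.
CREATE TABLE products (
    id         bigserial   PRIMARY KEY,
    name       text        NOT NULL,
    attributes jsonb,
    updated_at timestamptz NOT NULL DEFAULT now(),
    -- Full-text search document derived from the name.
    search_doc tsvector GENERATED ALWAYS AS (to_tsvector('english', name)) STORED,
    -- Toy 3-dimensional embedding; real embeddings have hundreds of dimensions.
    embedding  vector(3)
);

-- Keyword filter, JSON filter and vector-distance ranking in one query.
SELECT id, name
FROM products
WHERE search_doc @@ plainto_tsquery('english', 'organic coffee')
  AND attributes @> '{"in_stock": true}'
ORDER BY embedding <-> '[0.1, 0.2, 0.3]'
LIMIT 10;
```

Geospatial data would need an additional extension such as PostGIS, which is left out here to keep the sketch short.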

➡️ All the latest LLMs, ML + AI algorithms

- Torch

- TensorFlow

- scikit-learn

- XGBoost

- LightGBM

- Pre-trained deep learning models from Hugging Face

- Llama 2

- Falcon LLM

- OpenAI

➡️ Open-source

We’re a totally open-source project, built on open-source ML libraries and the vast Postgres ecosystem.

Examples

Train

    SELECT pgml.train(
        'Sales Forecasting',
        task => 'regression',
        relation_name => 'historical_sales',
        y_column_name => 'next_week_sales',
        algorithm => 'xgboost'
    );

Deploy

    SELECT pgml.deploy(
        'Sales Forecasting',
        strategy => 'best_score',
        algorithm => 'xgboost'
    );

Predict

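    SELECT pgml.predict(
        'Sales Forecasting',
        ARRAY[
            last_week_sales,
            week_of_year
        ]
    ) AS prediction
    -- Assumption: the feature columns are read from the training table;
    -- the original snippet omitted the FROM clause.
    FROM historical_sales
    ORDER BY prediction DESC;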

Future Development

PostgresML is in the early stages of development, but we are moving quickly. It’s our vision to help as many app developers as possible build and scale AI apps without all of the complexity.

If you’d like to stay up to date with our progress or contribute to the project (feedback, questions and comments are all welcome), you can check us out on GitHub or chat with me personally anytime on our Discord.
